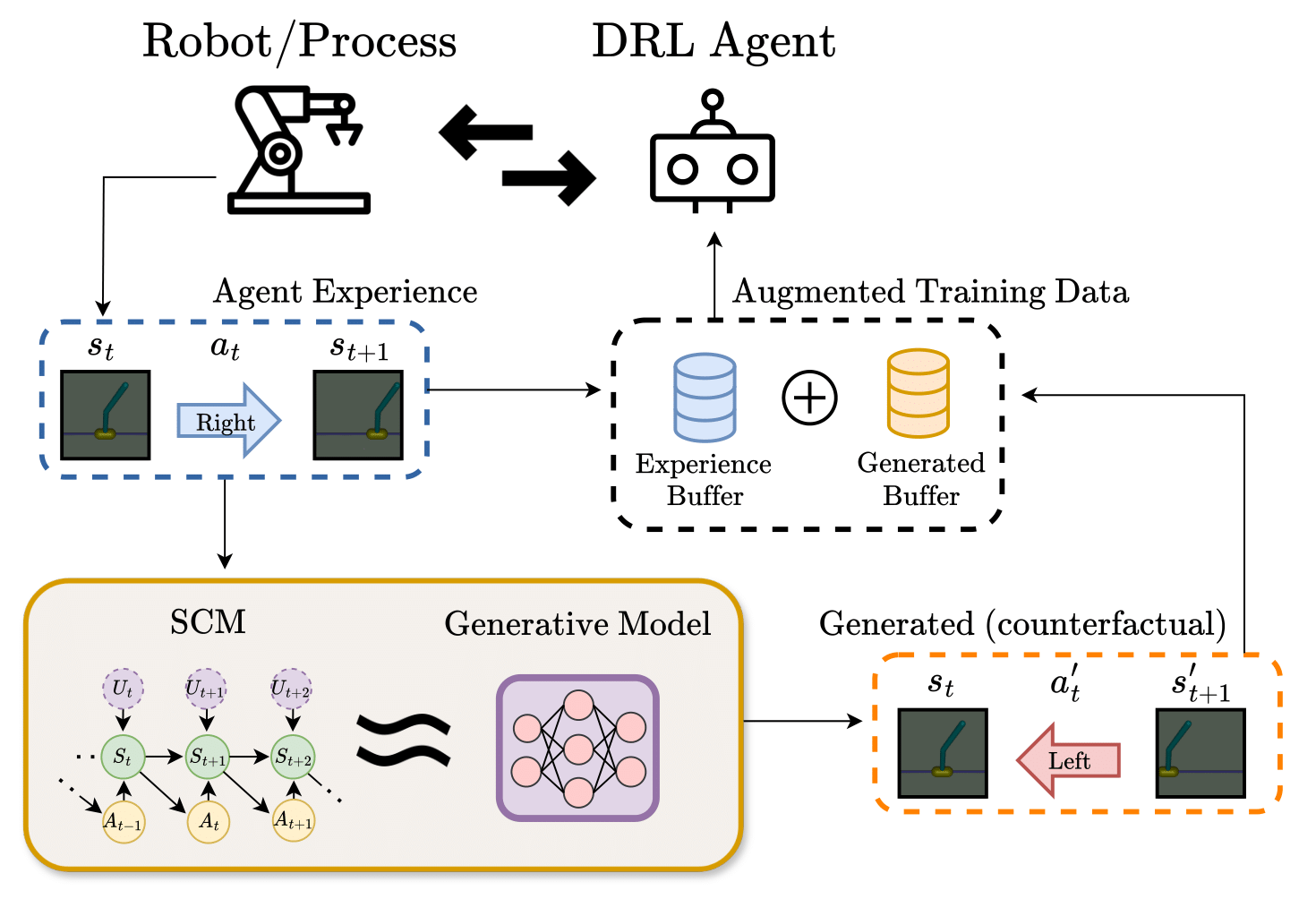

Abstract: This thesis addresses the challenges of data scarcity and noisy datasets in machine learning, with a focus on offline deep reinforcement learning. We introduce two novel frameworks for counterfactual data generation: WRe-CTDG and S-CTDG. These methods aim to augment pre-collected datasets by generating additional high-fidelity experiences that are consistent with the environment’s underlying transition dynamics. We evaluate both frameworks across several environments, comparing their performance against non-augmented baselines. The results show significant improvements in reinforcement learning performance, particularly for S-CTDG in complex environments, while also revealing important trade-offs regarding its applicability. This work contributes to the integration of causal inference and machine learning, offering new approaches for leveraging causal relationships in data augmentation for offline deep reinforcement learning.

Keywords: Deep Reinforcement Learning, Causal Inference

Repository: GitHub

Master Student: Paolo Speziali

Supervisor: Gabriele Costante

Co-supervisor: Raffaele Brilli